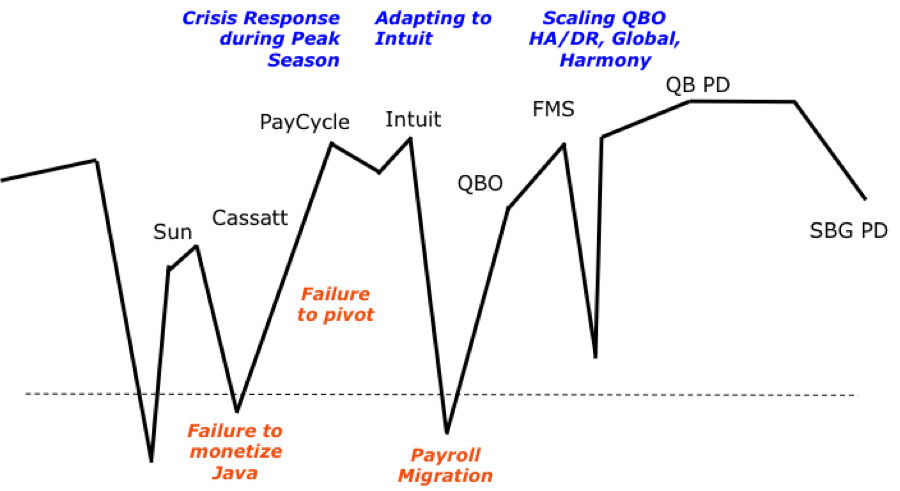

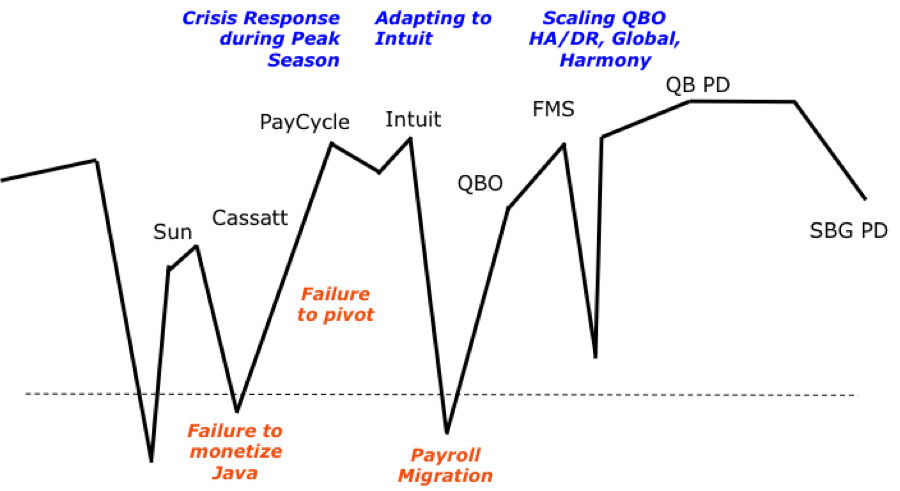

At Intuit, we use the concept of a "journey line" to share our experiences and highlight defining moments in our career and/or personal lives. These defining moments shape us as individuals and leaders, and they also provide a window into what we value and how we operate. Over the years, sharing journey lines with my teams and my peers has been a great way to understand each other’s leadership styles so that we can work better together as a team.

I would like to share three defining successes and three defining failures. The keyword here is "defining." I have chosen the moments that have significantly shaped my leadership style, who I am, and how I operate.

As a former youth basketball coach for boys in third through eighth grade, I believe in having a playbook. Since I coached out of necessity rather than basketball acumen, I was always watching other coaches and learning from them. I shamelessly incorporated offenses, defenses, and practice routines from other coaches. Whatever worked, I used. I apply the same philosophy to management. I learn from the best around me and incorporate winning strategies into my playbook.

I also stayed true to some coaching principles. For example, I never yelled at the boys, because I would never want another father to yell at my son. I remember one coach from a Redwood City team who yelled at his players throughout the game and after it, even after they had beaten us! We all remembered him and made sure we beat his team in a rematch the following year-- boy, did that feel good! I also taught both of my kids golf, and I always focused on keeping it fun, on the course and at the driving range. Golf is a tough sport, with lots of mechanics to get right. All too often, I would see a dad on the driving range criticizing his son while trying to correct his swing. Both father and son were frustrated, and no one was having fun. Golf is about fun, right? And who has a perfect swing? We all want to get better. So, focus on the positives and improve gradually.

With that spirit of focusing on the positives, let's start with my defining successes:

- Crisis Response during Peak Season (PayCycle, Jan 2009): act quickly and take charge during a crisis.

- Adapting to Intuit (2009-2010): lead in a positive way, respect the past, and bring people along the change journey.

- Scaling QuickBooks Online (2011-2013): build a stable foundation, set lofty but achievable goals, and partner to deliver business outcomes.

But in the spirit of "reviewing film" after a game, here are my defining failures:

- Failure to monetize Java (Sun, 2000 – 2004): pay attention to business outcomes and drive growth for the business.

- Failed pivot and persevering on flawed strategy (Cassatt - 2007): when your strategy clearly isn't working, change your strategy! Doubling down on a bad strategy can be a recipe for disaster.

- Payroll migration (Intuit - Aug 2010): own the outcome, take charge during a crisis and react quickly, and believe in yourself.

I will share the lessons I learned from each, and how they have shaped my leadership style. Regarding failures, my philosophy is simple. Learn from the mistake, but don't repeat the same mistake! Failure to learn is not acceptable.

My journey line since 1997

Crisis Response during Peak Season (PayCycle, Jan 2009)

Before joining PayCycle in June 2008, I had led software teams that developed enterprise software and platforms for Java, cloud computing, and data centers-- all of the key ingredients required for creating Software as a Service (SaaS) offerings. However, I had never been responsible for a SaaS offering until I joined PayCycle. With any SaaS offering, you have to plan for daily workloads as well as for spikes driven by seasonality and other unplanned events. For payroll, January is the busiest month. Employers must send W-2s to their employees and 1099s to their contractors, and they must also file quarterly and annual taxes. January 1 and January 31 are the peak days, with traffic 5-10 times higher than normal.

During my first peak season, our site experienced extreme performance issues on the first Monday in January. At 10am, all of the servers were pegged. Once the number of sessions crossed a certain threshold, page-load times climbed sharply, from 2 seconds to 10 seconds and then to 25 seconds. The problem was database-bound: there was massive I/O between the Network Attached Storage (NAS) and the database (DB), but very little activity between the app servers and the DB. My team and I launched three parallel efforts:

- Physical I/O Capacity: That night, we installed a second shelf of storage into the NetApp to increase IOPS. Kyle, one of the systems engineers, caught the red-eye to Phoenix with the NetApp rep, and they carried a 1TB storage shelf on the plane!

- Software Throttling: Until we could identify the problem, we implemented throttling in the app so that we could turn away new users when we approached critical thresholds. Users who were already using the offering could continue without impact.

- Database Tuning: We had multiple calls with Microsoft throughout the day and had their systems engineer onsite the following morning.
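The software throttling described above is a form of admission control: protect the people already inside, and turn away newcomers once the system nears its limit. Here is a minimal Python sketch of that idea, assuming the "critical threshold" is a cap on concurrent sessions (the post only says we turned away new users near critical thresholds). The class and method names (`SessionAdmissionController`, `admit`, `release`) and the limit are hypothetical illustrations, not PayCycle's actual code.

```python
import threading


class SessionAdmissionController:
    """Hypothetical sketch: existing sessions always pass; new sessions are
    rejected once the number of active sessions reaches an assumed cap."""

    def __init__(self, max_sessions: int) -> None:
        self.max_sessions = max_sessions  # assumed critical threshold
        self._active = set()              # session IDs currently admitted
        self._lock = threading.Lock()     # app servers handle requests concurrently

    def admit(self, session_id: str) -> bool:
        with self._lock:
            if session_id in self._active:
                return True   # users already in the product continue unaffected
            if len(self._active) >= self.max_sessions:
                return False  # shed load by turning away only brand-new sessions
            self._active.add(session_id)
            return True

    def release(self, session_id: str) -> None:
        with self._lock:
            self._active.discard(session_id)  # logout or timeout frees a slot


if __name__ == "__main__":
    gate = SessionAdmissionController(max_sessions=2)
    print(gate.admit("alice"), gate.admit("bob"))  # True True
    print(gate.admit("carol"))  # False: at the threshold
    print(gate.admit("alice"))  # True: existing session continues
    gate.release("bob")
    print(gate.admit("carol"))  # True: a slot opened up
```

The design choice mirrors the goal in the crisis: degrade gracefully for new arrivals rather than slowing down everyone who is already mid-task.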

By noon on Tuesday, we had all three solutions in place, we had solved the problem, and we had identified the root cause. The database server's query optimizer was executing bad query plans because of poor DB tuning. In November, we had added significantly more (and different) data to one of the large tables, but we had not changed the sampling rate used when updating statistics on that table. That caused the poor query plans, which in turn drove heavy I/O between the DB server and storage. Once we raised the sampling rate to 50% on this table and updated the statistics, we were back in business!

I survived my first live-fire exercise in my new role leading a SaaS offering. Not only was the experience a great confidence builder, it was also a great way to bond with my new team. My key takeaways: act quickly and take charge during a crisis.

Adapting to Intuit (2009-2010)

My last post described my experiences and the lessons I learned after being acquired by Intuit. I won't restate them all here, but I will provide some additional insights. Every company has a unique culture. I learned to appreciate that culture and find ways to contribute in a respectful and accretive way. This goes back to my coaching philosophy. Lead in a positive way, respect the accomplishments of the past, and bring people along the change journey.

Scaling QuickBooks Online (2011-2013)

In March 2011, I was asked to lead the Engineering team for QuickBooks Online (QBO). During my first week in the new role, QBO experienced three major outages, each lasting more than 4 hours. Although the outages were triggered by human error in the data centers, the root cause of the long recovery times was the application's lack of scalability. Luckily, I had a pretty extensive playbook from my PayCycle days, and I had been through very similar situations. However, in my new role, I depended on other teams across Intuit for the shared services that QBO used and for the production infrastructure (servers, networking, and storage).

- I worked with my peer, Jack Tam, who led shared services, and we developed a firefighting plan as well as a long-term scalability plan. After the fires were out, Guy Taylor, who led Operations, and I developed a plan for High Availability and Disaster Recovery that included provisioning two new data centers and upgrading the entire production stack (servers, networking, and storage).

- With my Engineering team, I set an ambitious goal of improving application-tier scalability by a factor of 10. I had done some "back of the envelope" calculations and knew that this level of scalability was possible. Instead of setting an incremental performance goal, I set a more audacious one, which challenged the team to think "out of the box" and find transformational opportunities. Later that year, we achieved a 7x increase in scalability while reducing hosting costs by 50%.

Now we had a scalable and reliable platform for QuickBooks Online. When I joined earlier that year, QBO was experiencing weekly outages; in fact, Guy and I regularly spent Fridays dealing with production issues and outages. By the end of the year, we were achieving 99.9% availability. Now that we were no longer fighting fires, we had time to think about more transformational changes that could drive business growth.
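To put 99.9% availability in perspective, here is the downtime budget it allows, assuming a 365-day year and a uniform budget across weeks. A single four-hour outage like those from my first week would consume nearly half of the annual budget (4 / 8.76 is about 46%).

```latex
\begin{aligned}
\text{annual downtime budget} &= (1 - 0.999) \times 365 \times 24\ \text{h} = 8.76\ \text{h} \\
\text{weekly downtime budget} &= (1 - 0.999) \times 7 \times 24\ \text{h} = 0.168\ \text{h} \approx 10\ \text{min}
\end{aligned}
```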

- Partnering with Heather Kirkby, who led Product Management, and Joe Wells, an Engineering Fellow on my team, we led a pivot that eventually reshaped the entire QBO user interface. We stopped all incremental UI development and completely overhauled the UI tech stack, moving from a server-side Java UI to a rich client built with JavaScript and CSS. This work evolved into a new design effort for the QBO UI.

- Working with Vishy Ranganath, who led the QBO team in Bangalore, and Anshu Verma, an architect in Vishy's team, we delivered QBO worldwide with support for 10 languages, 40 currencies, and global accounting standards.

Today, QuickBooks Online is one of Intuit's flagship offerings, and QBO is driving growth across Intuit's small business ecosystem. My key takeaways: build a stable foundation, set lofty but achievable goals, and partner to deliver business outcomes.

Failure to Monetize Java (Sun, 2000 – 2004)

Now, let's talk about my key failures and what I learned from each one. During my five years at Sun, I helped create the Java platform. I led teams that contributed to Java 2 Enterprise Edition (J2EE) 1.3, J2EE 1.4, the Java Web Services Developer Pack, and the Java and XML (JAX*) technologies. We changed the way the world creates applications, and Java is still one of the predominant development platforms. However, we failed to make money from Java for Sun. The Java group at Sun built a great platform, but every other J2EE partner enjoyed more monetary success than Sun's own J2EE application server product line. When Linux and x86 servers disrupted Sun's high-margin Solaris and SPARC hardware businesses, the software group could not make up the lost revenue and profit. Sun's top-line revenue and profitability began to erode, and Sun never recovered from that decline.

This failure became readily apparent when Oracle acquired BEA for $8.4B and then acquired Sun a year later for $7.4B. In its heyday, Sun had over $16B in annual revenue and a $100B market cap. Although BEA was the most successful J2EE application server vendor, it had only $2B in annual revenue and a much smaller market cap than Sun's. Every time I pass the Facebook campus in Menlo Park-- the former Sun campus-- I'm painfully reminded of this failure. Could I have done more to monetize Java? During my days at Sun, I focused primarily on the technology and the platform, and I didn't lean into the business.

My takeaways: Pay attention to business outcomes and drive growth for the business.

Failed pivot and persevering on flawed strategy (Cassatt: 2007)

In late 2004, I joined Cassatt, a well-capitalized cloud computing startup. Bill Coleman, former CEO of BEA, was at the helm, and he was a prolific fundraiser. Cassatt was an early pioneer in cloud computing, back when it was called utility computing. Cassatt followed a classic enterprise sales model, with a 9-12 month sales cycle that started with a proof of concept (POC) and ended in a 7-figure enterprise deal. Our direct sales force tried to convince companies to transform their IT shops into private clouds managed by Cassatt software. We even pitched our technology to Amazon while they were building EC2, which became a cornerstone of AWS. Unfortunately, we never achieved a scalable business model. Each year, we signed a new 7-figure deal but continued to burn $1-2M per month.

Gamiel Gran, who led business development, and I led an effort to develop a power-management appliance that relied on a viral sales model with a much shorter sales cycle. We leveraged our IP and existing software to focus on power management for departmental labs and server closets. Unfortunately, we did not succeed in pivoting the company to this approach. Instead, the company doubled down on an even more elaborate, all-encompassing cloud computing platform. After three and a half years at Cassatt, I joined PayCycle. A year later, both companies were acquired, and both deals were announced on June 2. Intuit acquired PayCycle for $170M, and CA acquired Cassatt for less than $10M. Cassatt had raised over $150M in funding over the years, and every investor was wiped out.

My takeaways: when your strategy clearly isn't working, it's time to change strategies! Doubling down on a bad strategy can be a recipe for disaster.

Payroll migration (Intuit - Aug 2010)

I alluded to this failure in my previous post, but I will provide more of the gory details here. After Intuit acquired PayCycle, PayCycle became the go-forward platform for Intuit Online Payroll (IOP), and we planned to migrate customers from the former payroll offerings to the PayCycle platform over the following year. Over the course of 9 months, we migrated the 60K standalone IOP customers to the new PayCycle offering without any issues other than concerns about price increases. The next 60K payroll customers were using a payroll solution tightly integrated with QuickBooks Online-- and that's where all hell broke loose.

We knew about feature differences between the former Intuit payroll offerings and the new PayCycle offering, but customers migrated from the standalone offering had not complained about the missing features. The QBO-Payroll customers, however, complained quickly after migration, and loudly. They called customer care in droves for help with the migration, which led to long phone wait times as well as long handle times. Customers also complained on social media and in community forums.

I made two key mistakes here. First, I was lulled into a false sense of security by the standalone payroll migration. Even though early indicators from the QBO payroll migration showed signs of customer frustration with the feature gaps, I ignored them. The migration was a long project, and I wanted to finish. Everyone wanted to be done. So we "ripped off the Band-Aid" and migrated 60K customers in 30 days. My second mistake was not reacting quickly. I waited two weeks before mobilizing the team to address the most critical feature gaps, and in the meantime, we continued the migrations. The right call would have been to stop the migrations, address the customer concerns, and then resume.

My key takeaways from this experience:

- Own the outcome: take charge during a crisis and react quickly. Had this been a production issue or outage, I would have reacted quickly. However, this situation was an entirely new experience for me: a "feature outage," or product gap.

- Believe in yourself: it gets really lonely when things are bad. I led the remediation effort and the product work to address the gaps, and at times it felt like I was in Siberia. However, you can't let one mistake-- no matter how large-- stop you from moving forward. Even after striking out in baseball, you will still have many at-bats in the remaining innings, and even great hitters only get a hit about 30% of the time. Pick yourself up, dust yourself off, and get back in the game.

I updated my playbook with the lessons I learned from this failure. Since then, I've been responsible for two product migrations, and they've gone much more smoothly. I applied everything I learned from this one and had contingency plans in place. "What doesn't kill you makes you stronger." I hope you've learned something about me, and that you can learn from my mistakes.